Let’s be honest — the moment you hear “p-value,” “alpha,” or “significance level” in psychology stats class, your brain either freezes… or quietly cries.

Most students think: “Is this math again?”, “Why is this even in psychology?”, or “What does this even mean, and why does it sound so judgmental?” But once you get the basic logic, it’s actually not that scary. So let’s break it down, simply and in your language.

Let’s start from the beginning — understanding things step by step, so we know why concepts like p-value, alpha, and significance are even used in the first place, and when they actually come into play.

Use Case: Let’s Set the Foundation Before We Dive In

Let’s say you got into a good college. You might wonder: “Did I get this seat because I deserved it (my marks, effort, and profile), or did I just get lucky?” This question can be framed as two competing statements: the null hypothesis (H0) and the research hypothesis (H1). The null hypothesis here would be: “You got in by chance, not because of anything special.” The research hypothesis is the answer you suspect is true: “I got in because of my skill and talent.”

Researchers start by assuming the null hypothesis (the opposite of what they believe) is true. If the research data make that assumption hard to keep, they reject it, and the research hypothesis gains support. Rejecting H0 makes H1 more believable, but it never proves H1 with complete certainty.

This logic applies not just to college seats but to research in general, and terms like alpha, p-value, and significance are all part of this process. Let’s explore the logic step by step with clear real-life examples.

First comes Alpha

In any research, the researcher sets an alpha value beforehand, usually 5% (0.05), because it strikes a good balance between two kinds of mistakes (more on this below). Alpha is the highest risk the researcher is willing to take of calling a result real when chance alone produced it. Announcing it up front lets readers, reviewers, and other researchers know how strict the judging will be. It’s like setting a cutoff point to decide what’s good enough and what isn’t.

For example:

Let’s say that by the end of this blog you feel you’ve understood the concepts really well. Then you think of taking a 20-question multiple-choice quiz to test your understanding.

Before taking the quiz, you mentally set a rule:

“If there’s more than a 5% chance that I got these answers right just by guessing, I won’t believe I’ve truly understood.” In other words, if luck alone could easily explain my score (more than a 5% chance), I won’t call the result trustworthy.

Example:

I attempt 20 MCQs and decide to set my strictness level (alpha) at 5% — meaning I’ll allow only a small chance that I got lucky.

- 5% of 20 questions = 1 question

So I’m saying:

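“If more than 1 of my answers feels like a lucky guess, I won’t fully trust that I’ve understood the topic.” That’s super strict, right? And that’s how researchers want to conduct their research: with very little room for chance, guesswork, or error. (This is a simplified picture. Strictly speaking, alpha limits the probability of the whole result arising by chance; it is not a count of lucky answers.)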
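To see what a formal test does with the same quiz, here is a minimal Python sketch. It assumes each MCQ has 4 options, so a pure guesser (the null hypothesis) gets each question right with probability 0.25, and it treats the number of correct answers as a binomial count. The helper names `p_value_at_least` and `cutoff_score` are made up for this illustration. The sketch finds the lowest score that a pure guesser would reach less than 5% of the time.

```python
from math import comb


def p_value_at_least(correct: int, n_questions: int, p_guess: float) -> float:
    """Chance of scoring `correct` or more if every answer is a pure guess (H0)."""
    return sum(
        comb(n_questions, k) * p_guess**k * (1 - p_guess) ** (n_questions - k)
        for k in range(correct, n_questions + 1)
    )


def cutoff_score(n_questions: int, p_guess: float, alpha: float) -> int:
    """Lowest score whose p-value is below alpha (the 'good enough' line)."""
    for score in range(n_questions + 1):
        if p_value_at_least(score, n_questions, p_guess) < alpha:
            return score
    return n_questions + 1  # no possible score is rare enough


N_QUESTIONS = 20
P_GUESS = 0.25  # assumption: 4 options per question
ALPHA = 0.05

needed = cutoff_score(N_QUESTIONS, P_GUESS, ALPHA)
print(f"Score needed at alpha = {ALPHA}: {needed}/{N_QUESTIONS}")
print(f"p-value for that score: {p_value_at_least(needed, N_QUESTIONS, P_GUESS):.4f}")
```

Under these assumptions, 9 or more correct out of 20 is already rarer than 5% for a pure guesser (p ≈ 0.041), while 8 correct is not (p ≈ 0.10). So in a real test, alpha is compared with the probability of the whole score under guessing, not with a quota of lucky answers.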

Let’s look at a few more points to keep in mind before we finish with alpha.

- Alpha is set before the research begins

It’s a pre-decided rule, not something you adjust after seeing the results. This avoids bias.

- Researchers usually set alpha at 5% (0.05)

This is a widely used rule of thumb, not a law of nature. You might wonder why 5% and not some other number. There are two reasons:

- A higher alpha increases the risk of a Type I error – A Type I error happens when you wrongly believe your result is meaningful (a false alarm). If you set a higher alpha, like 10% or more, it becomes easier for random or weak results to pass as significant. That means you might treat something unimportant as important. In fact, when the null hypothesis is true, alpha is exactly the chance of making this mistake, so the higher the alpha, the more likely it becomes.

- An alpha lower than 5% increases the risk of a Type II error – This happens when you think there’s nothing there, but there actually is (a missed result). Because your cutoff is so strict, you might miss real results and wrongly call them not significant. For example, imagine you study well and take a test, but you decide you’ll allow yourself only 1 mistake (alpha too strict). When the results come and you made 2 mistakes, you might wrongly conclude, “I didn’t understand the topic,” even though you did pretty well. Because your rule was too strict, you missed a result that was actually good.

- In short, when you reduce one type of error, the other tends to increase. So researchers set alpha at a balanced point (like 5%) to keep both Type I and Type II errors at acceptable levels.
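The trade-off can be made concrete with a small Python sketch of the quiz. It assumes 4-option MCQs (so pure guessing, H0, means a 0.25 chance per question) and a hypothetical student who truly learned the topic and answers each question correctly with probability 0.5; both numbers are illustrative assumptions, not data. For each alpha it finds the passing score, then computes how often a guesser wrongly passes (Type I) and how often the real learner wrongly fails (Type II).

```python
from math import comb


def tail_at_least(score: int, n: int, p: float) -> float:
    """P(X >= score) for a binomial count with n trials and success chance p."""
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(score, n + 1))


def cutoff_score(n: int, p_guess: float, alpha: float) -> int:
    """Lowest score that a pure guesser reaches with probability below alpha."""
    for score in range(n + 1):
        if tail_at_least(score, n, p_guess) < alpha:
            return score
    return n + 1


N = 20
P_GUESS = 0.25      # H0: pure guessing on 4-option MCQs (assumption)
P_UNDERSTOOD = 0.5  # hypothetical student who really learned something (assumption)


def error_rates(alpha: float) -> tuple[int, float, float]:
    cutoff = cutoff_score(N, P_GUESS, alpha)
    type_1 = tail_at_least(cutoff, N, P_GUESS)           # guesser wrongly passes
    type_2 = 1 - tail_at_least(cutoff, N, P_UNDERSTOOD)  # real learner wrongly fails
    return cutoff, type_1, type_2


for alpha in (0.20, 0.05, 0.01, 0.001):
    cutoff, t1, t2 = error_rates(alpha)
    print(f"alpha={alpha:<6} cutoff={cutoff:>2}/{N}  Type I={t1:.4f}  Type II={t2:.4f}")
```

With these made-up numbers, making alpha stricter pushes the passing score from 8 up to 12 correct answers: the Type I error drops from about 0.10 to under 0.001, while the Type II error climbs from about 0.13 to about 0.75.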

Significance

Significance means the result is strong enough to trust, and it happens when the p-value is smaller than alpha. In fact, the “significance level” is just another name for alpha: it’s set before looking at the data and decides what counts as “important.” If a result would be very unlikely to happen by fluke (its p-value is below alpha), we call it significant. That’s why “alpha” and “significance level” are used interchangeably, and together with the p-value they determine when we can reject the null hypothesis.

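There’s no fancy word for when the p-value is higher than alpha. We just say the result is not statistically significant (or, in student terms, “bad results,” haha). This doesn’t prove the null hypothesis is true; it only means we couldn’t rule out chance.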

We call something significant because “the chance of such a result happening by luck was very low, and yet the result still appeared,” and that makes it special. For example:

You learn a topic and take a test to check your understanding.

You get 99% of the answers right — which is super rare just by luck.

Because the chances of that happening randomly are so low,

we say the result is statistically significant.

You probably didn’t just get lucky; you most likely understood it.
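Here is the same idea as a quick Python sketch. It assumes a hypothetical 100-question test with 4 options per question, so “99% right” means 99 correct answers, and it uses pure guessing as the null hypothesis. `is_significant` is a made-up helper that simply encodes the rule from the text: significant when the p-value is below alpha.

```python
from math import comb


def p_value_at_least(correct: int, n_questions: int, p_guess: float) -> float:
    """Chance of scoring `correct` or more if every answer is a pure guess (H0)."""
    return sum(
        comb(n_questions, k) * p_guess**k * (1 - p_guess) ** (n_questions - k)
        for k in range(correct, n_questions + 1)
    )


def is_significant(p_value: float, alpha: float) -> bool:
    """Decision rule: the result is significant when the p-value is below alpha."""
    return p_value < alpha


ALPHA = 0.05
p = p_value_at_least(99, 100, 0.25)  # assumption: 100 questions, 4 options each
print(f"p-value = {p:.2e}, significant: {is_significant(p, ALPHA)}")
```

The p-value comes out astronomically small, far below 0.05, which is why a score like this counts as statistically significant.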

Also, statistical significance doesn’t measure practical importance. A result can be real (unlikely to be due to chance) and still be too small to make a meaningful difference in practice.

Practical Importance Explained

You might read this blog and feel like you’ve understood the topic — maybe you even get a few answers right on an MCQ paper (great start!).

But does that understanding help you answer tougher questions in a competitive exam?

Or give you clarity on how to apply it in research or real life?

If not, then your learning is showing improvement, but not yet enough to be practically valuable.
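A minimal Python sketch of why “significant” and “important” are different questions. It runs a one-sample z-test on a hypothetical study technique that raises exam scores by just 0.5 marks out of 100, assuming the spread of gains (SD = 10) is known. All numbers are invented for illustration. The effect size used here, Cohen’s d (mean gain divided by SD), measures how big the effect is, while the p-value mostly reflects how much data you have.

```python
from math import erfc, sqrt


def z_test_two_sided(mean_gain: float, sd: float, n: int) -> tuple[float, float]:
    """One-sample z-test of 'average gain = 0', with the SD assumed known."""
    z = mean_gain / (sd / sqrt(n))
    p_value = erfc(abs(z) / sqrt(2))  # two-sided p-value from the normal curve
    return z, p_value


MEAN_GAIN = 0.5  # hypothetical: +0.5 marks on a 100-mark exam
SD = 10.0        # hypothetical spread of students' gains, assumed known
ALPHA = 0.05

for n in (100, 40_000):
    z, p = z_test_two_sided(MEAN_GAIN, SD, n)
    d = MEAN_GAIN / SD  # Cohen's d: the size of the effect, unaffected by n
    print(f"n={n:>6}  z={z:5.2f}  p={p:.3g}  significant={p < ALPHA}  effect size d={d}")
```

With these made-up numbers, the tiny 0.5-mark gain is not significant with 100 students (p ≈ 0.62) but becomes highly significant with 40,000, even though the effect size stays at d = 0.05. Significance says the effect is probably not zero; the effect size says whether it is big enough to matter.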

Understanding P-Value: Your Alpha Companion

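A p-value on its own is just a probability: how likely you’d be to get a result at least this extreme if the null hypothesis were true (that is, if only chance were at work).

It becomes useful for making a decision only when compared to alpha.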

Without alpha, you don’t know what counts as “too much chance” —

so setting alpha gives you a clear line to judge if your result is significant or just random.

Let’s take the same example with and without alpha to see how it changes the way we read the p-value:

With Alpha:

You attempt 20 MCQs and set your alpha at 5% —

which means you’re okay with 1 answer being right by luck (5% of 20 = 1).

You get 18 correct and feel confident —

but then you realise 2 of your answers were guesses.

That’s more than the 1-fluke limit you allowed.

So now, based on the standard you set,

you can’t fully trust your performance as true understanding.

It’s still good — but not strong enough by your own strict rule.

Without Alpha:

You attempt 20 MCQs and get 18 right.

You feel good — but you’re not sure how much of it was luck vs real understanding.

You have no clear rule or line to judge this by.

So, even though the score looks strong,

there’s no way to say for sure whether to trust the result or not.

It stays in a grey zone — leaving you guessing.

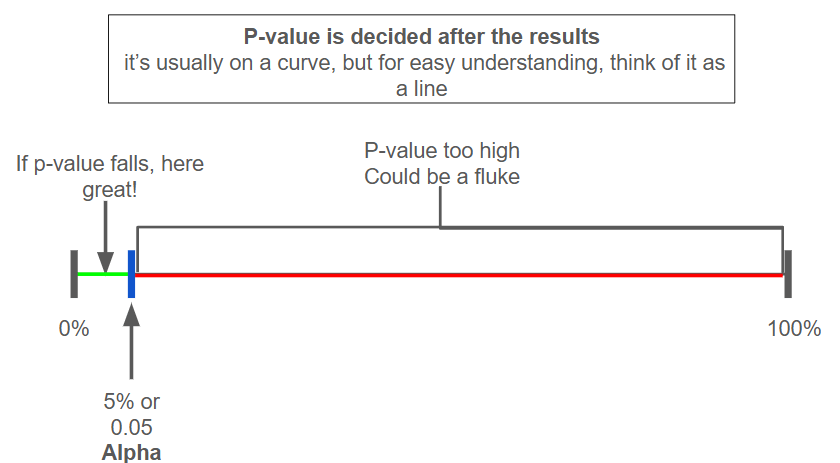

So, a low p-value means the result would be unlikely if only chance were at work. When it falls below alpha, we treat the result as statistically significant.
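The point that a p-value only gets its verdict from alpha can be sketched in a few lines of Python: one p-value, two verdicts, depending on the alpha chosen in advance. The sketch assumes a hypothetical score of 10 out of 20 on 4-option MCQs, with pure guessing (0.25 chance per question) as the null hypothesis.

```python
from math import comb


def p_value_at_least(correct: int, n_questions: int, p_guess: float) -> float:
    """Chance of scoring `correct` or more if every answer is a pure guess (H0)."""
    return sum(
        comb(n_questions, k) * p_guess**k * (1 - p_guess) ** (n_questions - k)
        for k in range(correct, n_questions + 1)
    )


p = p_value_at_least(10, 20, 0.25)  # hypothetical: 10 of 20 correct, 4-option MCQs
print(f"p-value = {p:.4f}")

for alpha in (0.05, 0.01):  # two researchers with different pre-set strictness
    verdict = "significant" if p < alpha else "not significant"
    print(f"alpha = {alpha}: {verdict}")
```

Here the p-value is about 0.014: significant for the researcher who chose alpha = 0.05, but not for the stricter one who chose 0.01. The number itself never changed; only the line it was compared against did.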

To paint a better picture

First step: The problem statement or question is stated.

Second step: Split the problem statement into two: the research hypothesis H1 (what you believe) and the null hypothesis H0 (the opposite of H1). The null hypothesis is the statement we try to reject with data, so that the evidence points toward H1 (only if the data genuinely support it, never by force).

Third step: Set alpha, the rule of strictness. It’s the limit we set beforehand to decide how much chance we’re willing to accept before trusting a result.

Fourth step: Calculate the p-value, i.e., how rare the result would be if the null hypothesis were true. On its own it doesn’t decide anything; it only becomes meaningful when compared to alpha.

Fifth step: Evaluate significance by comparing the p-value with alpha. If the p-value falls below alpha, the result is statistically significant, meaning it’s unlikely to have happened by chance alone, and we reject the null hypothesis.
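Putting the five steps together, here is a minimal Python sketch for the quiz example. It assumes 4-option MCQs (so H0, pure guessing, means a 0.25 chance per question) and a binomial model of the score; the function names are made up for illustration, and a real study would use whichever test fits its own design.

```python
from math import comb


def p_value_at_least(correct: int, n_questions: int, p_guess: float) -> float:
    """Step 4 helper: chance of scoring `correct` or more under pure guessing."""
    return sum(
        comb(n_questions, k) * p_guess**k * (1 - p_guess) ** (n_questions - k)
        for k in range(correct, n_questions + 1)
    )


def quiz_hypothesis_test(correct: int, n_questions: int,
                         p_guess: float = 0.25, alpha: float = 0.05) -> str:
    # Step 1: question: "Did I understand the topic, or did I just guess?"
    # Step 2: H0: every answer is a guess (success chance = p_guess);
    #         H1: I do better than guessing.
    # Step 3: alpha is fixed before looking at the score (passed in as an argument).
    # Step 4: compute the p-value for the observed score.
    p_value = p_value_at_least(correct, n_questions, p_guess)
    # Step 5: compare the p-value with alpha and decide.
    if p_value < alpha:
        decision = "significant: reject H0"
    else:
        decision = "not significant: fail to reject H0"
    return f"{correct}/{n_questions} correct, p = {p_value:.4f} -> {decision}"


print(quiz_hypothesis_test(7, 20))
print(quiz_hypothesis_test(12, 20))
```

Under these assumptions, 7 out of 20 is quite reachable by guessing (p ≈ 0.21), so H0 stays; 12 out of 20 is very unlikely by guessing (p < 0.001), so H0 is rejected.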

Here are some questions for you to solve:

1. What does a p-value less than 0.05 typically indicate?

(A) The null hypothesis is true

(B) The result is statistically significant

(C) The test was not valid

(D) The sample size is too small

2. What does the significance level (alpha) represent in hypothesis testing?

(A) The chance of accepting a false null hypothesis

(B) The power of the test

(C) The threshold for calling a result significant

(D) The sample error

3. If alpha is set at 0.01, what does it say about the researcher’s decision rule?

(A) They’re willing to accept a 1% chance of Type I error

(B) They’re okay with missing real results

(C) Their study is underpowered

(D) The test is non-parametric

4. Which of the following combinations will lead to rejecting the null hypothesis?

(A) p-value > alpha

(B) p-value = alpha

(C) p-value < alpha

(D) p-value = 1

5. What is most likely to increase if alpha is made too low (e.g., 0.001)?

(A) Type I error

(B) Type II error

(C) Statistical power

(D) Sample size

6. Which statement is TRUE about statistical significance?

(A) It guarantees the result is practically important

(B) It proves the null hypothesis is false

(C) It depends on the p-value being below the significance level

(D) It only occurs when alpha is 0.10

Answers: 1-B, 2-C, 3-A, 4-C, 5-B, 6-C

If you liked the explanation and found it useful, remember to get in touch with Powerwithin. Having been part of it as a fresher, then as a student, and now as an intern, I truly believe this community provides support and structure in every possible way.
